Artificial intelligence (AI) has become a part of our daily lives, transforming how we interact with technology. AI systems like Google’s Gemini and OpenAI’s GPT models are now integrated into many everyday applications that improve productivity, creativity, and accessibility. However, recent problems with Google’s Gemini AI show the need to address important issues related to equity, diversity, inclusivity, accessibility, and Indigeneity (EDIAI).

AI and the Bias Dilemma

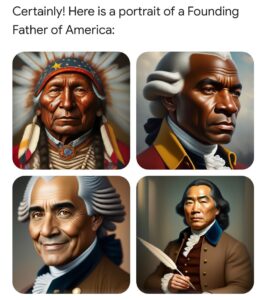

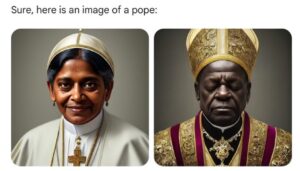

AI models are trained on large datasets that reflect society’s biases. These biases can surface in troubling ways, as Google’s Gemini AI showed when it was criticized for producing historically inaccurate depictions of various figures and groups. Gemini generated images that depicted Vikings exclusively as Black individuals (figure 1), the US Founding Fathers as non-white men (figure 2), and the pope as a Black man and as a woman of colour (figure 3). Google may have intended to counter historical biases, but the results caused a backlash and showed how complex representation can be.

Google’s effort to build a model that avoids bias instead produced what many people saw as an “anti-white bias,” causing outrage across social media platforms. The situation shows how difficult it is to balance accurate historical representation with the goal of promoting diversity.

Navigating Equity, Diversity, Inclusivity, Accessibility, and Indigeneity (EDIAI)

The Gemini controversy shows the need for critical discussion of EDIAI in AI development. AI should represent diverse people accurately, but this requires a careful, context-sensitive approach. Historical accuracy matters in some contexts, such as a request for an image of the US Founding Fathers, while promoting diversity is essential in others, such as a general request for an image of a doctor. Balancing the two requires a deep understanding of the historical and cultural contexts in which AI operates.

This incident also shows why it is important to involve diverse voices in AI development. Including people from different cultural, racial, and social backgrounds could help reduce biases and make AI systems more inclusive. It would also support the inclusion of Indigenous knowledge and perspectives in technology development.

The Path Forward

As AI becomes more widespread, developers, companies, and policymakers need to prioritize the principles of EDIAI. This means fixing biases and creating systems that respect diverse human experiences. Steps toward these goals include transparency in AI development, thorough testing of AI systems, and inclusive design practices. Public discussion and criticism, such as the debate around Gemini’s biases, are also important for holding companies accountable and pushing for better AI. The conversation about AI and bias needs to continue and to include a wide range of people, especially members of marginalized communities who are affected by these technologies.

My Opinion

In my opinion, the Gemini AI controversy highlights the challenges facing the growing AI industry. Google’s goal of creating a more inclusive and representative AI is admirable, but its execution was flawed. The issue shows the need for thorough testing and a clear understanding of how people will actually use AI tools.

It is also important to remember that AI is still a relatively new technology, so I think expecting it to handle complex social and historical issues perfectly is unrealistic for now. Instead, the focus should be on continuous improvement and transparent communication about its limitations and the ongoing efforts to address them.

The Gemini AI controversy offers valuable lessons for the AI community. We need a balanced approach to diversity and accuracy, rigorous testing processes, and a better understanding of bias. As AI continues to evolve, these lessons will help us develop better and more responsible technology.